Turning Fortnite into PUBG with Deep Learning (CycleGAN)

Understanding CycleGAN for Image Style Transfer and exploring its application to graphics mods for games.

If you are a gamer, you have probably heard of the two insanely popular Battle Royale games out right now, Fortnite and PUBG. They are two very similar games in which 100 players duke it out on a small island until just one survivor remains. I like the gameplay of Fortnite but tend to prefer the more realistic visuals of PUBG. This got me thinking: could we have graphics mods for games that let us choose the visual style we like, without relying on the game developers to provide that option? What if a mod could render the frames of Fortnite in the visuals of PUBG? I decided to explore whether Deep Learning could help, and came across neural networks called CycleGANs that happen to be very good at image style transfer. In this article, I’ll go over how CycleGANs work and then train them to visually convert Fortnite into PUBG.

What are CycleGANs?

CycleGANs are a type of Generative Adversarial Network (GAN) used for cross-domain image style transfer. They can be trained to convert images from one domain, like Fortnite, into another domain, like PUBG. The training is unsupervised: there is no one-to-one pairing between images of the two domains.
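Because the data is unpaired, a training loop only needs to draw screenshots from each domain independently. The following is a minimal sketch that assumes screenshots are referenced by file name. `unpaired_batches` and the file names are hypothetical, and a real pipeline would also load and resize the images.

```python
import random


def unpaired_batches(fortnite_frames, pubg_frames, batch_size, seed=0):
    """Yield (fortnite_batch, pubg_batch) tuples for unpaired training.

    Each domain is shuffled independently, so the i-th Fortnite frame has
    no relationship to the i-th PUBG frame: there are no matched pairs.
    """
    rng = random.Random(seed)
    fortnite = list(fortnite_frames)
    pubg = list(pubg_frames)
    rng.shuffle(fortnite)
    rng.shuffle(pubg)
    # The two folders may hold different numbers of screenshots; stop when
    # the smaller one runs out of full batches.
    n_batches = min(len(fortnite), len(pubg)) // batch_size
    for i in range(n_batches):
        start, stop = i * batch_size, (i + 1) * batch_size
        yield fortnite[start:stop], pubg[start:stop]


# Usage with placeholder file names (hypothetical):
fortnite_files = [f"fortnite_{i:03d}.png" for i in range(10)]
pubg_files = [f"pubg_{i:03d}.png" for i in range(7)]
for f_batch, p_batch in unpaired_batches(fortnite_files, pubg_files, batch_size=2):
    print(f_batch, p_batch)
```

The two domains do not even need the same number of screenshots. Each batch simply mixes whatever frames the shuffles produce.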

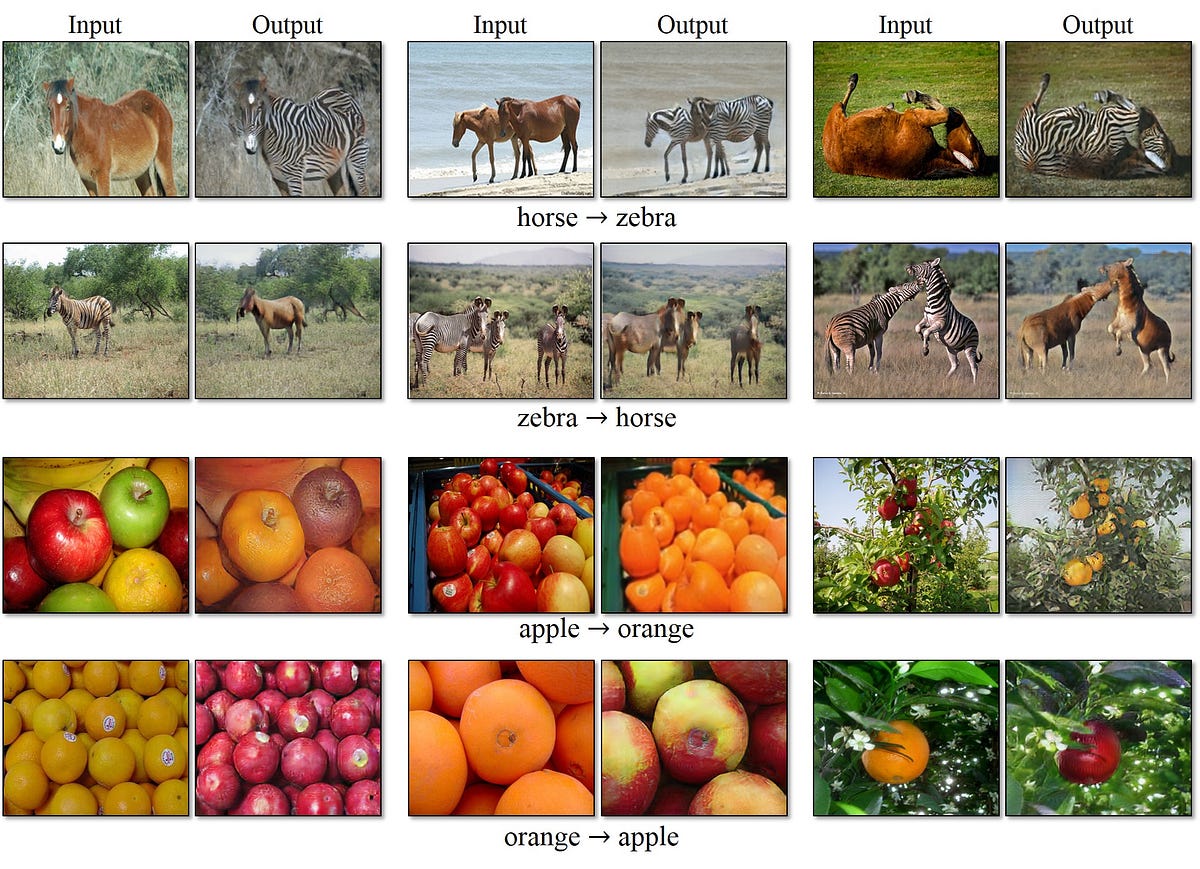

The network learns to identify objects in images of the original domain and to apply the transformations needed to match the appearance of the same objects in images of the target domain. The original implementation of this algorithm was trained to convert horses into zebras, apples into oranges, and photos into paintings, with amazing results.

How do they work?

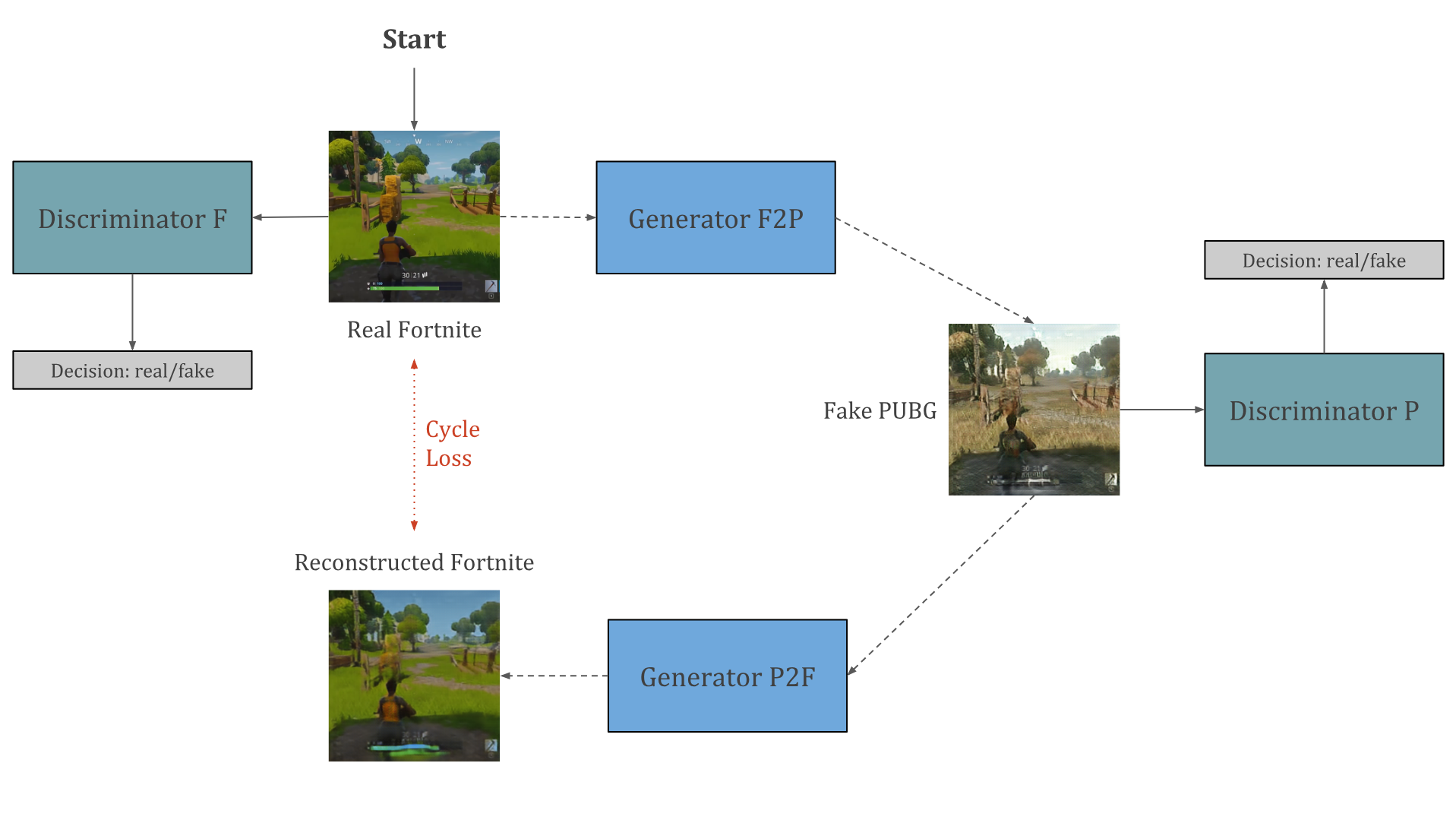

Let us try to understand how CycleGANs work, using Fortnite as our input domain and PUBG as our target domain. Using a large number of screenshots from both games, we train a pair of Generative Adversarial Networks, one learning the visual style of Fortnite and the other that of PUBG. The two networks are trained simultaneously in a cyclic fashion. As a result, they learn correspondences between the same objects in both games and can make appropriate visual transformations. The following figure shows the general architecture of the cyclic setup of these two networks.
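For reference, the original CycleGAN paper combines the two adversarial games and the reconstruction condition into a single objective. The version below is written with this article's F2P naming. The names G_P2F (the reverse generator), D_F (the Fortnite discriminator) and D_P (the PUBG discriminator) are our own labels, and this is the paper's formulation, not necessarily the exact loss used in this experiment:

```latex
\begin{aligned}
\mathcal{L}(G_{F2P}, G_{P2F}, D_F, D_P)
  &= \mathcal{L}_{\mathrm{GAN}}(G_{F2P}, D_P)
   + \mathcal{L}_{\mathrm{GAN}}(G_{P2F}, D_F)
   + \lambda\, \mathcal{L}_{\mathrm{cyc}}(G_{F2P}, G_{P2F}) \\
G_{F2P}^{*},\, G_{P2F}^{*}
  &= \arg\min_{G_{F2P},\, G_{P2F}} \; \max_{D_F,\, D_P} \;
     \mathcal{L}(G_{F2P}, G_{P2F}, D_F, D_P)
\end{aligned}
```

Each generator tries to fool the discriminator of its target domain, and each discriminator tries to catch those fakes. The λ-weighted cycle term (the paper uses λ = 10) ties the two directions together.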

We start the training process by taking an original image from Fortnite. We train two deep networks, a generator and a discriminator. The generator is trained to convert the input image from the original domain to the target domain, producing a fake PUBG image. The discriminator sees random real PUBG screenshots from the training set alongside these fakes and learns over time to distinguish real PUBG images from generated ones. In turn, the generator learns to fool it.
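The original CycleGAN paper uses a least-squares adversarial loss rather than the classic log-likelihood GAN loss. This is a minimal sketch of that form on plain lists of discriminator scores. The function names are hypothetical, the scores are assumed to be floats, and we do not know which adversarial loss this particular experiment used.

```python
def mean(values):
    return sum(values) / len(values)


def discriminator_loss(real_scores, fake_scores):
    """Least-squares discriminator loss.

    real_scores: discriminator outputs on real PUBG screenshots.
    fake_scores: outputs on PUBG-styled images produced by the generator.
    Real images are pushed toward a score of 1, fakes toward 0.
    """
    real_term = mean([(s - 1.0) ** 2 for s in real_scores])
    fake_term = mean([s ** 2 for s in fake_scores])
    # Halving the loss slows the discriminator relative to the generator.
    return 0.5 * (real_term + fake_term)


def generator_adversarial_loss(fake_scores):
    """The generator is rewarded when the discriminator scores its fakes as real (1)."""
    return mean([(s - 1.0) ** 2 for s in fake_scores])


print(generator_adversarial_loss([1.0, 1.0]))      # 0.0: discriminator fully fooled
print(discriminator_loss([1.0, 1.0], [0.0, 0.0]))  # 0.0: perfect discriminator
```

The two losses pull in opposite directions on the same fake scores. That tension is what drives the generator toward PUBG-looking output.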

To make sure this transformation is meaningful, we enforce a reconstruction condition. We simultaneously train a second generator/discriminator pair that reconstructs the image in the original domain from the generated (fake) image. We require this reconstruction to be similar to the original image. The difference between the two gives a cycle loss that we aim to minimize during training. This is similar to an autoencoder, except that the intermediate step is not an encoding in a latent space but an entire image in our target domain.
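The reconstruction condition can be sketched as an L1 (mean absolute) difference between each image and its round-trip reconstruction. This is a minimal illustration under several assumptions. Images are flattened lists of pixel values, and the generators are passed in as plain functions. It applies the check in both directions with weight λ = 10, following the original CycleGAN paper rather than any confirmed detail of this experiment. `brighten` and `darken` are hypothetical toy stand-ins for the real networks.

```python
def l1_distance(a, b):
    """Mean absolute difference between two flattened images of equal size."""
    assert len(a) == len(b), "images must have the same number of pixels"
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(fortnite_img, pubg_img, f2p, p2f, lam=10.0):
    """Cycle loss in both directions, weighted by lam.

    f2p / p2f are the two generators (Fortnite->PUBG and PUBG->Fortnite).
    """
    reconstructed_fortnite = p2f(f2p(fortnite_img))  # Fortnite -> PUBG -> Fortnite
    reconstructed_pubg = f2p(p2f(pubg_img))          # PUBG -> Fortnite -> PUBG
    return lam * (l1_distance(reconstructed_fortnite, fortnite_img)
                  + l1_distance(reconstructed_pubg, pubg_img))


# Toy "generators" (hypothetical): a brightness shift and its exact inverse.
def brighten(img):
    return [v + 0.25 for v in img]


def darken(img):
    return [v - 0.25 for v in img]


frame = [0.0, 0.5, 1.0]
print(cycle_consistency_loss(frame, frame, brighten, darken))    # 0.0: perfect round trip
print(cycle_consistency_loss(frame, frame, brighten, brighten))  # 10.0: each round trip is off by 0.5
```

An exact inverse pair gives zero cycle loss. A pair that drifts, here by brightening twice, is penalized in proportion to how far the reconstruction moves from the original.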

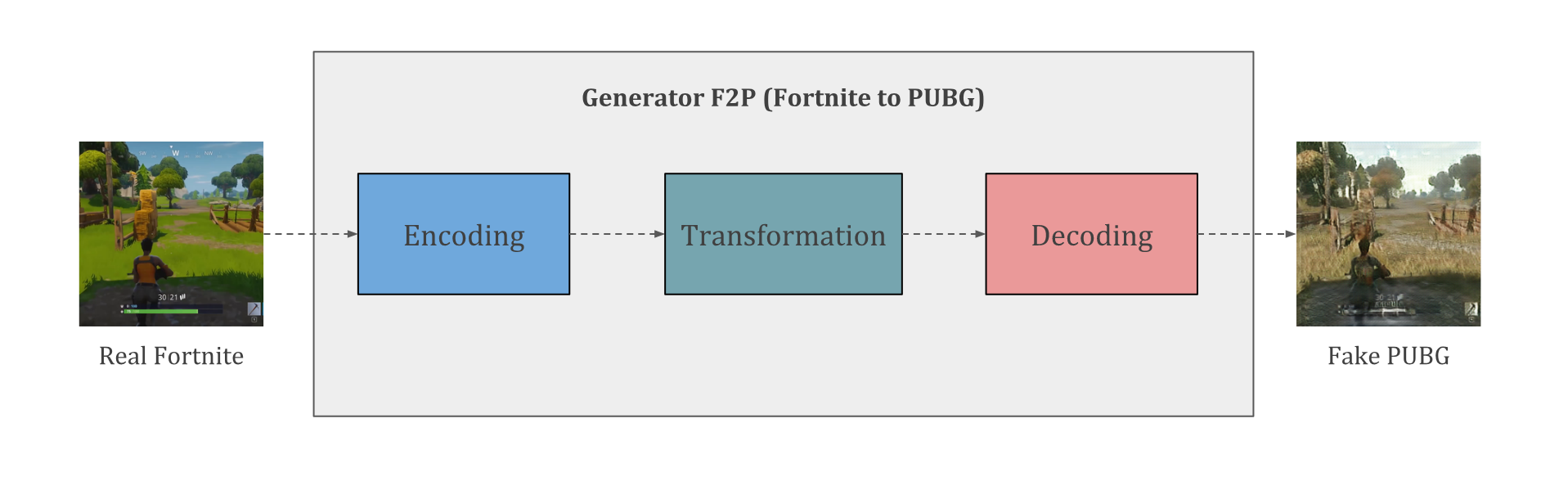

The generator network (F2P) used here is made up of three major convolution blocks. The first finds an encoding of the Fortnite screenshot in a lower-dimensional latent space. The second transforms this into an encoding that represents PUBG in the same latent space. The third, a decoder, then constructs the output image from the transformed encoding, giving us a Fortnite image that looks like PUBG.
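The article does not list the generator's layers. The following sketch therefore assumes the ResNet-style generator described in the original CycleGAN paper, which matches the encoder, transformation and decoder structure above. That generator uses c7s1-64, d128 and d256 layers, nine residual blocks for 256x256 inputs, then u128, u64 and c7s1-3. The code only tracks tensor shapes, and `trace_generator` is a hypothetical helper.

```python
def conv_out(size, kernel, stride, pad):
    """Spatial size after a convolution (standard output-size formula)."""
    return (size + 2 * pad - kernel) // stride + 1


def transposed_conv_out(size, kernel, stride, pad, output_pad):
    """Spatial size after a transposed ("fractionally strided") convolution."""
    return (size - 1) * stride - 2 * pad + kernel + output_pad


def trace_generator(size=256, n_res_blocks=9):
    """Return (layer, channels, side) for an assumed ResNet-style CycleGAN generator."""
    trace = []
    # Encoder: a 7x7 conv, then two stride-2 3x3 convs that halve the side each time.
    side = conv_out(size, kernel=7, stride=1, pad=3)
    trace.append(("c7s1-64", 64, side))
    side = conv_out(side, kernel=3, stride=2, pad=1)
    trace.append(("d128", 128, side))
    side = conv_out(side, kernel=3, stride=2, pad=1)
    trace.append(("d256", 256, side))
    # Transformation: residual blocks of two 3x3 stride-1 convs keep the shape fixed.
    for _ in range(n_res_blocks):
        side = conv_out(conv_out(side, 3, 1, 1), 3, 1, 1)
    trace.append((f"R256 x{n_res_blocks}", 256, side))
    # Decoder: two transposed convs double the side back up, then a 7x7 conv to RGB.
    side = transposed_conv_out(side, kernel=3, stride=2, pad=1, output_pad=1)
    trace.append(("u128", 128, side))
    side = transposed_conv_out(side, kernel=3, stride=2, pad=1, output_pad=1)
    trace.append(("u64", 64, side))
    side = conv_out(side, kernel=7, stride=1, pad=3)
    trace.append(("c7s1-3", 3, side))
    return trace


for layer, channels, side in trace_generator(256):
    print(f"{layer:>8}: {channels:3d} x {side} x {side}")
```

For a 256x256 screenshot, the encoder shrinks the spatial side to 64 while widening to 256 channels. The residual blocks transform that representation without changing its shape, and the decoder brings it back to a 3x256x256 image.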

One limitation I ran into during training was that GPU memory restricted me to 256x256 images. This significantly affects the results. If you have more than 8 GB of video memory, you could try generating images of up to 512x512. If you manage to, please let me know!
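To see why resolution is so expensive, consider that every feature map's size grows with the number of pixels, so doubling the side quadruples activation memory. This rough estimate assumes the ResNet-style layer layout from the original CycleGAN paper and float32 values. It counts only the convolution outputs of a single generator's forward pass, so it is a lower bound and not a measurement from this experiment. `GENERATOR_FEATURE_MAPS` and `generator_activation_mib` are hypothetical names.

```python
# Assumed layer outputs of a ResNet-style generator: (channels, downsampling factor).
# Each of the 9 residual blocks contributes two 3x3 conv outputs at 1/4 resolution.
GENERATOR_FEATURE_MAPS = (
    [(64, 1), (128, 2), (256, 4)]
    + [(256, 4)] * 18
    + [(128, 2), (64, 1), (3, 1)]
)


def generator_activation_mib(side, batch_size=1, bytes_per_value=4):
    """Rough float32 memory for the conv outputs of one forward pass, in MiB.

    A lower bound: it ignores normalization/activation copies, gradients,
    optimizer state, the discriminators and the second generator.
    """
    values = sum(c * (side // f) ** 2 for c, f in GENERATOR_FEATURE_MAPS)
    return batch_size * values * bytes_per_value / 2**20


for side in (256, 512):
    print(f"{side}x{side}: ~{generator_activation_mib(side):.2f} MiB")
```

The absolute numbers are small next to 8 GB. However, a CycleGAN training step runs two generators and two discriminators and keeps gradients and optimizer state for all of them, so the 4x jump from 256x256 to 512x512 compounds quickly.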

Results

The images generated by CycleGAN after 12 hours of training look very promising. The network successfully converted the colors of the sky, the trees and the grass from those of Fortnite to those of PUBG. The over-saturated colors of Fortnite were transformed into the more realistic colors of PUBG.

The sky looks less bluish, and the cartoonish greens of the grass and trees look much closer to those seen in PUBG. It even learnt to replace the health meter at the bottom of the screen with PUBG's gun and ammo indicator! What it could not link across the two domains was the appearance of the player, which is why the pixels around the player are somewhat blurry. Overall, the network did a decent job of identifying objects across the two domains and transforming their appearance.

For longer results, see the accompanying video. If you like what you see, don’t forget to subscribe to my YouTube channel!

Application to graphics mods in games

While the results look really good to me, we clearly still have a long way to go before I can actually play Fortnite with PUBG graphics. But once these networks can generate higher-resolution images in real time, it could become possible to build graphics mod engines for games without relying on the game developers. We could take the visual style of a game we like and apply it to any other game!
